Year End Thoughts on AI Agents

What a year. I didn't think we would make it, but somehow it's almost 2026. For a variety of reasons, primarily motivational malaise, I didn't really get a chance to write down many of my thoughts this year.

In my day-to-day job I worked on a lot of AI-related projects this year. A lot. Naturally, I have a lot of opinions on these. Instead of doing any special posts, I thought I would post a collection of takes I had throughout the year as a fun exercise to do during the holiday break.

An Agentic Future?

Earlier this year "agentic AI" became a thing, both in practice and as a buzzword. It is the new hotness that will change everything, or so every television ad about AI wants you to think.

Conceptually, an AI agent lets a model perform some task, either when it is asked to do so or when it decides the task is needed. Models perform a task by executing a tool. Tools can be defined and exposed through an MCP (Model Context Protocol) server, which is a pretty simple service that exposes a set of tools that an agent can invoke.

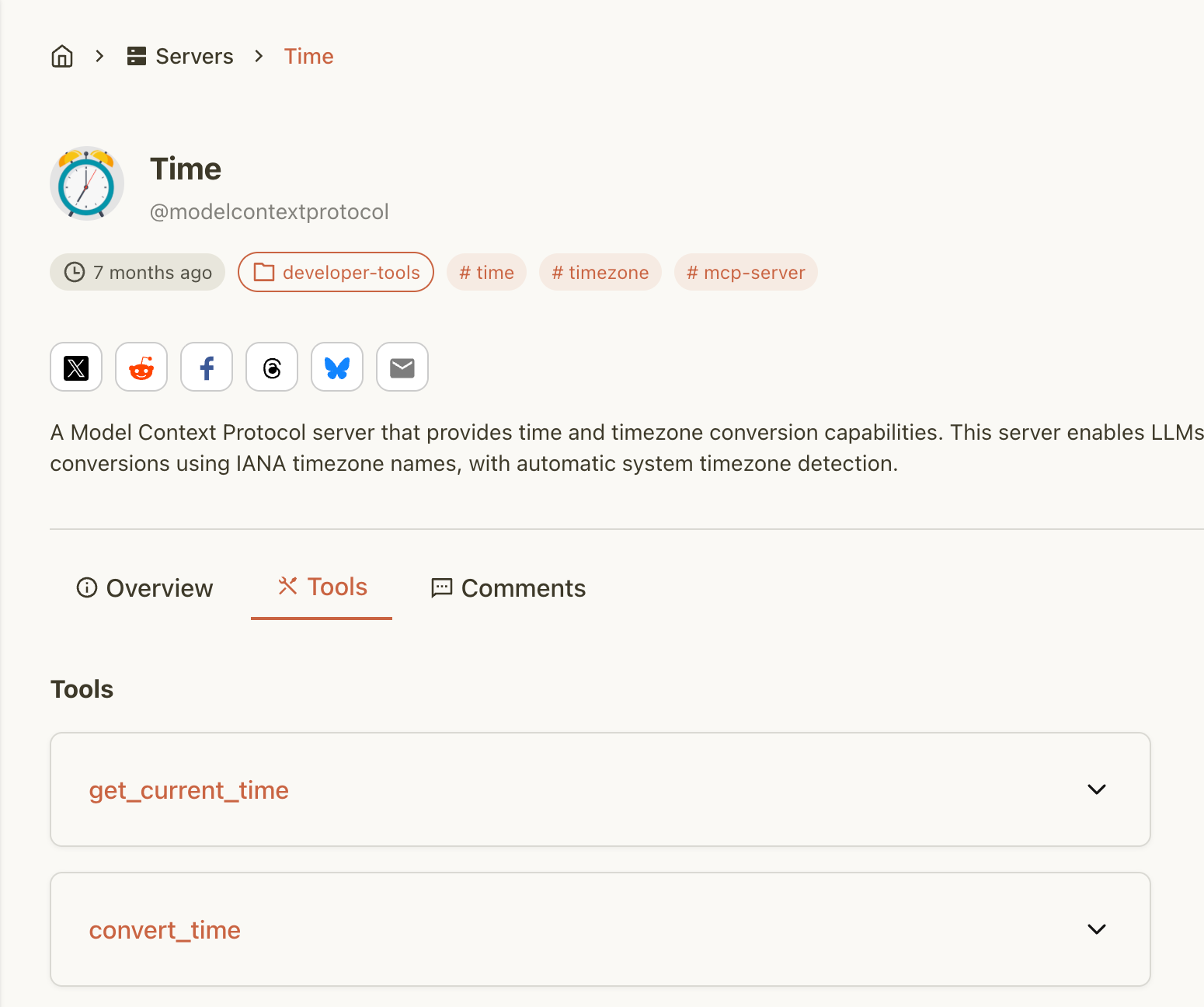

Here is a really simple example of an MCP server which exposes two tools:

If you look at the source code, it is pretty straightforward. It exposes functions to list tools, and code that executes the two functions above. For example, the get_current_time function looks like this:

    def get_current_time(self, timezone_name: str) -> TimeResult:
        """Get current time in specified timezone"""
        # timezone_name arrives from the caller unchanged
        timezone = get_zoneinfo(timezone_name)
        current_time = datetime.now(timezone)

        return TimeResult(
            timezone=timezone_name,
            datetime=current_time.isoformat(timespec="seconds"),
            day_of_week=current_time.strftime("%A"),
            is_dst=bool(current_time.dst()),
        )

The input that is sent to this server is passed directly to these functions (in this case, a parameter named timezone_name), and that value is used, via get_zoneinfo, in the call to datetime.now. A tool exposed through an MCP server can be as simple or as complicated as the author wants.

A really high-level example of how a full, end-to-end flow could be implemented is:

1. An MCP server is configured to expose a tool, for example "scan_code".

2. The MCP server defines this tool and sets a specific parameter (e.g. code_block).

3. A model is made aware of the MCP server that exposes the tool above.

4. As part of the model's execution, it determines that it needs to invoke the scan_code tool. It sees that the tool requires the code_block parameter and prepares some text for this parameter.

5. The model responds with output that fits the input the MCP server needs.

6. Some component (not the model itself) sends the data to the MCP server.

7. The MCP server executes a command, as defined in its configuration, with this parameter.

8. The MCP server responds with the tool output, and that output is passed to the model (as a new text input).

9. The model does some analysis on the results and proceeds.
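The flow above can be sketched in a few lines of Python. This is a minimal simulation under stated assumptions, not how any particular agent framework works: fake_model stands in for a real model, the TOOLS registry stands in for an MCP server, and the JSON shape of the tool call is invented for illustration.

```python
import json


def scan_code(code_block: str) -> str:
    """Hypothetical tool: count a couple of risky-looking calls in the text."""
    findings = [name for name in ("eval(", "exec(") if name in code_block]
    return f"{len(findings)} finding(s)"


# Stand-in for the MCP server's tool definitions: name -> parameters + handler.
TOOLS = {"scan_code": {"params": ["code_block"], "handler": scan_code}}


def fake_model(messages: list) -> str:
    """Stand-in for a model: it requests the tool once, then reports on the result."""
    last = messages[-1]
    if last["role"] == "tool":
        return "Analysis: " + last["content"]
    # The model only produces text; here that text describes a tool call.
    return json.dumps(
        {"tool": "scan_code", "arguments": {"code_block": "eval(user_input)"}}
    )


def dispatch(tool_call_text: str) -> str:
    """The component that is NOT the model: parse the call and run the tool."""
    call = json.loads(tool_call_text)
    tool = TOOLS[call["tool"]]  # an unknown tool name raises KeyError
    arguments = call["arguments"]
    if sorted(arguments) != sorted(tool["params"]):
        raise ValueError("unexpected parameters for tool")
    return tool["handler"](**arguments)


def run_agent(user_prompt: str) -> str:
    messages = [{"role": "user", "content": user_prompt}]
    tool_call = fake_model(messages)    # the model decides it needs the tool
    tool_output = dispatch(tool_call)   # execution happens outside the model
    messages.append({"role": "tool", "content": tool_output})
    return fake_model(messages)         # the model continues with the tool output


if __name__ == "__main__":
    print(run_agent("Please review my code"))
```

The part worth staring at is dispatch: it is ordinary code, and it is the only place where the model's text turns into an action.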

So, that's a lot. It's a bit fuzzy too, since a lot depends on the various frameworks that are used.

Securing The Probably Securable

A grizzled, grey-bearded security professional may look at this, step 6 in particular, and scream something like "CODE EXECUTION AS A SERVICE" before passing out from a seizure. There is a lot of truth to that fear: this whole workflow has a LOT of areas where things can go wrong! Certainly more areas than an LLM chatbot would.

That being said, I do find it a bit annoying when people jump to assuming something can't be done securely. You are being paid to secure complicated systems, not to tell people to bang two rocks together. One thing that I would encourage people to remember is that all of this tool usage is happening outside of the model. The model can trigger an interaction with a tool or script, but that execution happens in the normal world of computer science. It is possible to design tools in a secure way.

After spending quite some time this year looking at various solutions that follow a flow like the one above, here are some high-level things I've learned to look out for:

- Tools need to be defined with restrictive inputs. You cannot control the input that a model will generate, so you must treat this input as extremely tainted.

Consider the toy example I mentioned above, a tool called "scan_code" that scans code. It accepts an input (called "code_block"). The MCP server accepts a JSON input like:

    {
        "code_block": "code sent from the model"
    }

Let's say you have a binary called codescanner that reads lines of code and performs some analysis on them, like this:

    /bin/codescanner --inline-text "<inputfrommodel>"

If the server builds this command as a string and hands it to a shell, an input from the model that contains a double quote (e.g. text "; cat /etc/passwd) could break out of the context of the command above, and you would get code execution on the server the tool runs on.

As a pentester, you need to look at each tool and each input and determine whether malformed input could trigger unexpected behavior. Doing this at runtime, through a model, is very difficult; you pretty much need to review the source code, or at least test the MCP server directly, to perform a thorough test.
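To make the codescanner example concrete, here is a rough sketch of the vulnerable pattern next to a safer one, plus the kind of direct test you might run against the handler. The size limit, function names and payload list are my own assumptions, and the first payload is the one from above with a trailing echo added so the quotes balance. Nothing is executed here; the sketch only builds commands.

```python
import shlex

MAX_CODE_BLOCK_BYTES = 64 * 1024  # assumed limit, tune it for the real tool


def build_command_unsafe(code_block: str) -> str:
    # Vulnerable pattern: the model's text is pasted into a shell command line.
    return f'/bin/codescanner --inline-text "{code_block}"'


def build_command_safe(code_block: str) -> list:
    # Treat the input as tainted: check type, size and NUL bytes first.
    if not isinstance(code_block, str):
        raise ValueError("code_block must be a string")
    if len(code_block.encode("utf-8")) > MAX_CODE_BLOCK_BYTES:
        raise ValueError("code_block is too large")
    if "\x00" in code_block:
        raise ValueError("code_block contains a NUL byte")
    # An argument list keeps the text as one argv entry; no shell parses it.
    # It is meant for subprocess.run(argv, shell=False).
    return ["/bin/codescanner", "--inline-text", code_block]


# Malformed inputs a tester might send straight to the tool handler.
PAYLOADS = [
    'text"; cat /etc/passwd; echo "',
    "$(id)",
    "`id`",
]


def check_payloads() -> dict:
    """Map each payload to the number of shell words the unsafe string becomes."""
    results = {}
    for payload in PAYLOADS:
        argv = build_command_safe(payload)
        assert argv[2] == payload  # still exactly one untouched argument
        # shlex.split only approximates shell word splitting. It does not model
        # $(...) or backticks, which a real shell still expands inside double quotes.
        results[payload] = len(shlex.split(build_command_unsafe(payload)))
    return results


if __name__ == "__main__":
    for payload, words in check_payloads().items():
        print(f"{payload!r}: unsafe string splits into {words} words")
```

Anything above three words for the unsafe string means the payload escaped the quoted argument. The safe builder never has that problem because the payload is not parsed at all.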

- The MCP server needs to be secured with authentication, authorization, logging, etc.

As described above, if you can call an MCP server you can execute a tool. The server either needs AuthN or needs to be in an extremely restrictive network location. Ideally, you want to secure the interaction between the model server and the MCP server with something like mutual TLS.

The AI Agents section of Cloudflare's documentation talks about authentication and authorization and lays out a set of options for implementing them.

The documentation for AuthN/Z and security in the official Model Context Protocol docs is, in comparison, a bit lacking.
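As a rough illustration of the difference between AuthN and AuthZ for a tool call, here is a minimal sketch using a shared-secret token and a per-client tool allowlist. The CLIENTS table, the token value and the function names are all hypothetical, and this is not taken from the MCP docs or from Cloudflare; a real deployment would more likely lean on an established mechanism such as OAuth or the mutual TLS mentioned above.

```python
import hmac
from typing import Optional

# Hypothetical client registry: client name -> shared secret and permitted tools.
CLIENTS = {
    "agent-frontend": {"token": "replace-with-a-real-secret", "tools": {"scan_code"}},
}


def authenticate(presented_token: str) -> Optional[str]:
    """Return the client name for a valid token, else None (AuthN)."""
    for name, client in CLIENTS.items():
        # compare_digest avoids leaking how much of the token matched via timing.
        if hmac.compare_digest(presented_token.encode(), client["token"].encode()):
            return name
    return None


def authorize(client_name: str, tool_name: str) -> bool:
    """Check that this client may call this specific tool (AuthZ)."""
    return tool_name in CLIENTS[client_name]["tools"]


def check_request(presented_token: str, tool_name: str) -> int:
    """Return an HTTP-style status code for a tool invocation request."""
    client_name = authenticate(presented_token)
    if client_name is None:
        return 401  # caller is unknown
    if not authorize(client_name, tool_name):
        return 403  # caller is known but may not use this tool
    return 200
```

The point of splitting the two checks is that "can reach the server" and "can run this particular tool" become separate decisions.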

- The MCP server should not hold privileged credentials or content. Consider it a tainted box.

- The MCP server should not be logging input that the model itself would not log.
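One way to read the logging point is that the server can record that a tool call happened without keeping a copy of what the model sent. The sketch below is an assumption on my part, not a standard: the function names and the choice of a byte count plus a truncated SHA-256 (useful for correlating calls, not for secrecy) are purely illustrative.

```python
import hashlib
import logging

logger = logging.getLogger("mcp_server")


def summarize_arguments(arguments: dict) -> dict:
    """Describe each argument by size and a short digest instead of its content."""
    summary = {}
    for name, value in arguments.items():
        raw = str(value).encode("utf-8")
        summary[name] = {
            "bytes": len(raw),
            "sha256_prefix": hashlib.sha256(raw).hexdigest()[:12],
        }
    return summary


def log_tool_call(tool_name: str, arguments: dict) -> dict:
    """Log the call's metadata and return the record that was logged."""
    record = {"tool": tool_name, "arguments": summarize_arguments(arguments)}
    logger.info("tool call: %s", record)
    return record
```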

I personally do think that you can implement enough controls to secure a given tool interaction via MCP. If the input to a tool is limited enough, and the code that executes the tool is designed to limit abuse, it can be done pretty securely.

Outside of security, there are a lot of problems that people often don't think about.

Keep it (Extremely Complicated), Stupid!

If popular culture is to be believed, everyone at every corporation is migrating to workflows powered by AI agents and super-charging their productivity. Maybe they are, maybe they are not.

What I can tell you is that the more complexity you add into any process, the harder it is to manage and the harder it is to secure. There are so many moving parts and security considerations with what is happening with these agents that it's hard for any security team to evaluate the risk unless they understand the mechanics of it all.

Consider a company where the team performs a task that requires someone to make changes to an Excel sheet. This is replaced by an AI Agent workflow that does the same thing, using a tool execution to trigger this change. Here is a short list of "what-if" scenarios I would be worried about:

- What if the workflow stops working, how many people can debug it or fix it?

- What if the person who designed the prompt/agent flow leaves the company, can anyone else fix it?

- What if the format of the Excel sheet changes - does anyone know which components (prompts, MCP server, tool definition) need to be updated?

- What if the change the workflow is expected to make to the Excel sheet is altered slightly, how many people could change the tool definition?

- What if there is a vulnerability introduced in the MCP server or agent framework, are there steps someone can take to manage that system?

- What if the model provider deprecates the version of the model you are invoking - and newer versions do not return data in the format you want?
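The last scenario is at least detectable if whatever sits between the model and the spreadsheet refuses output it does not recognize. A minimal sketch, assuming the model is asked to return a JSON object describing one cell change; the field names (sheet, cell, value) are hypothetical and would come from your own tool definition.

```python
import json

# Hypothetical shape of a single spreadsheet change requested by the model.
EXPECTED_FIELDS = {"sheet": str, "cell": str, "value": (int, float, str)}


def parse_change(model_output: str) -> dict:
    """Return the parsed change, or raise ValueError if the format has drifted."""
    try:
        data = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("model output is not a JSON object")
    if set(data) != set(EXPECTED_FIELDS):
        raise ValueError(f"unexpected fields: {sorted(data)}")
    for field, allowed_types in EXPECTED_FIELDS.items():
        if not isinstance(data[field], allowed_types):
            raise ValueError(f"field {field!r} has the wrong type")
    return data
```

Failing loudly here does not fix a deprecated model, but it turns silent bad edits into an error someone can see.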

I've reviewed systems that have components and scripts that haven't been changed in twenty years - but still work perfectly fine. It is pretty unlikely that a year from now the ecosystem of AI and AI Agents will be the same as it is today. That isn't even accounting for the fact that everything about this is non-deterministic!

How good is good enough?

There has been a specific point that has kind of been lodged in my head for the last several months, and it is probably a big driver for me writing this at all. That point is: how good does something need to be in order to be trusted to autonomously perform a task? I don't mean from a security or safety perspective, but how good does it have to be in order to fulfil its primary business function?

In certain industries, that threshold is pretty much 100% of the time. You would want an autonomous transit signalling system to work correctly 100% of the time. In business, and technology in particular, you can slap together something that meets the bottom bar of "working", claim the reward and move on.

Here is a workflow I've seen played out countless times:

- A process exists which performs an important business task.

- A plan is made to automate this task. Eventually something is completed that shows nice baseline results and gets 90% of the way there.

- The tool/automation is shared with the people who perform the task:

  - People are initially amazed, and the individuals who made the automation are showered with praise.

  - Documentation is updated to point people to the new tool/automation.

- People start using the new automation and realize that, since it only handles 90% of the task, it needs to be used in conjunction with whatever the old process was.

- People discover more and more edge cases where the automation does not work.

- Quickly, managing the automation in conjunction with the actual delivery requirements becomes a burden.

- Time goes on, the business requirements change and the automation no longer works.

- No one updates the automation to handle the new situation, and it becomes abandoned.

Obviously there are many, many success stories where automation has improved things at an organization - but it requires thorough planning and constant maintenance. The 10% really matters! It is not something you can just ignore. If you have ever been responsible for maintaining documentation you will know that any mildly complicated task is actually a collection of edge-cases in a trench coat.

A question you have to ask is, how many mistakes or failures can your workflow tolerate? If you replace a process that is able to consistently, but slowly, generate workable outputs with a system that generates workable outputs 90% of the time very quickly - you had better hope that this workflow is able to handle these failure cases smoothly.
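A back-of-the-envelope way to frame that question is to put a price on a failure. The sketch below uses made-up numbers (a 30 minute manual process, a 2 minute automation that works 90% of the time); the only thing it is meant to show is that the answer hinges on how expensive each failure is to notice and clean up.

```python
def expected_minutes(fast_minutes: float, success_rate: float,
                     failure_minutes: float) -> float:
    """Average time per task when each failure needs extra handling."""
    return fast_minutes + (1.0 - success_rate) * failure_minutes


def break_even_failure_minutes(old_minutes: float, fast_minutes: float,
                               success_rate: float) -> float:
    """Failure-handling cost at which the automation stops saving time."""
    return (old_minutes - fast_minutes) / (1.0 - success_rate)


if __name__ == "__main__":
    # Hypothetical numbers, purely for illustration.
    old, fast, rate = 30.0, 2.0, 0.9
    for failure_cost in (30.0, 120.0, 480.0):
        average = expected_minutes(fast, rate, failure_cost)
        print(f"failure costs {failure_cost:.0f} min -> average {average:.1f} min per task")
    print(f"break-even: {break_even_failure_minutes(old, fast, rate):.0f} min per failure")
```

With these invented numbers the automation stays ahead until a failure costs roughly 280 minutes to detect and repair, which sounds generous until a bad output goes unnoticed for a week.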

A lot of public discussions around AI Agent workflows showcase complex tasks being performed via agentic interactions, and people present these solutions as easy, turnkey automations that will kick-start every corporation. I think this is fantasy. You are trying to wrangle a non-deterministic thinking machine into invoking a script to do a task when you, as an organization, were not able to do the "invoke a script to do a task" part of it alone!

Even in an organization where really smart, really up-to-date people are working on that integration, the fluidity of requirements makes the problem very hard to deal with. The fuzzy parts, where these agents interact with parts of your process or workflow, are knots that might be impossible to untangle consistently enough to make it worth it. Even if you get it to work, the complexity of it all makes for an extremely fragile solution, one where even minor functional changes can trigger a lot of pain.

(Over)Confidence and Fear

One thing that the current wave of AI products has done an excellent job of disseminating is confidence. Models return answers with a casual confidence, and everyone who talks about AI speaks as if they were cowering before a monolith of magic that will surely fix any problem presented to it.

If you (or your exec suite) see the way people talk about AI, or AI Agents in general, in the public discourse, it's easy to become fearful that if you aren't jumping in head first, you are falling behind. You simply must rip up your perfectly functional workflows and processes to jump into a complicated, subscription-based workflow that may or may not reach parity with your current solution.

I also feel the same way about the confidence with which people talk about how AI will empower people to do a task they have no knowledge of or affinity towards. Like, your company has a 10-step interview process to hire a junior developer, but actually you are going to be A-OK letting a non-technical manager push code via an AI tool?

I'm skeptical, but maybe I'm just wrong. I suppose if I write another post at the end of 2026 I can reflect on it. Worst case, I'll be burning a printout of this one for warmth after AI Agents. Happy Holidays!